alienware

So today we review a card that mostly went under the radar.

This was probably because the 6900 Radeons didn't exactly set the world on fire. Sadly most of Nvidia's range were faster for the same money, so making a thoroughbred card with the 6970 core didn't catch imaginations as most of the other Lightning cards did.

A shame, but not the end of the world.

In case you are unfamiliar with MSI's Lightning range of cards I will do my best to briefly sum up what they are. What MSI usually do is take a core and then go to work designing a card around said core. They use custom capacitors, custom VRMs and top notch "Military Grade" components to ensure that you end up with a card that can surpass a stock variant in every way. And to that end the 6970 Lightning is a success in every aspect.

First up let's take a quick look over the PCB of a stock standard 6970 card.

Meh. Just, meh. It's adequate. It's good enough, it's nice. But it isn't very special is it? This is something that OEMs tend to do. They make something that is up to the task, yet nothing more. That would be why MSI have been so successful in recent years, as they know what the end user wants.

We want -

Bigger.

More powerful.

Easy on the eyes.

A unique standout part.

So that's why when you take a look at the PCB of the Lightning card the differences are immediately apparent.

If you don't quite remember then cast your minds back to the GTX 480 Lightning card. It was an absolutely violent work of art. A thuggish, brutish masterpiece that dominated the GPU world for ages. In fact, I seem to remember that it still holds the world GPU Vantage record.

That's no mean feat, because the GTX 480 was widely written off as an overall failure. So can MSI take another failure and turn it into a success? Let's find out, shall we?

First of all before I say another word you'd better bust out the ruler. This card takes the rulebook and tears it into shreds.

Yes, that is a GTX 470 and yes, Battlefield 3 rendered it useless with its insatiable need for VRAM. But as you can probably guess from looking at the picture, the Lightning is big. The PCB measures a case busting 31cm long, with the monstrous cooler hanging over by at least another centimetre. This means you are going to need around thirteen inches of space between your case's PCI slot plate and the front of your case. If you wanted to know what the card looks like compared to a stock 6970 then wonder no more.
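For anyone who wants to check their own case before ordering, the sums above are easy enough to sketch in Python. This is only a rough illustration: the function names are made up for the example, and the 1cm overhang is the "at least" figure quoted above, so treat the result as a minimum.

```python
CM_PER_INCH = 2.54

def card_length_cm(pcb_cm: float = 31.0, overhang_cm: float = 1.0) -> float:
    """Total card length: PCB plus the cooler overhang (a minimum figure)."""
    return pcb_cm + overhang_cm

def cm_to_inches(cm: float) -> float:
    """Convert centimetres to inches."""
    return cm / CM_PER_INCH

def fits(clearance_cm: float) -> bool:
    """True if the gap behind the slot plate is at least as long as the card."""
    return clearance_cm >= card_length_cm()

length = card_length_cm()
print(f"Card length: {length:.0f}cm, about {cm_to_inches(length):.1f} inches")
print("Fits in a 30cm gap:", fits(30.0))
print("Fits in a 33cm gap:", fits(33.0))
```

Measure your own gap from the slot plate to the drive cage (or whatever gets in the way first) and pass it to `fits`.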

So now that we know what we are up against, what else does the 6970 Lightning have to offer us?

Well, on the back of the card is a plethora of switches. To the layman these switches will remain "unswitched". However, for those who want a laugh and don't care about burning a whopping great hole in the ozone layer, they are there for our entertainment. Firstly there is a rather large switch. At each end of the switch you will see the words Silence and Performance.

Apparently this was an error on MSI's part, and they mean no such thing. Basically in Silence mode the card will allow you to overclock to just over 1GHz with a maximum voltage of 1.35V. When you throw the switch, however? Hello 2GHz and 2 volts. Now obviously this switch has been designed purely with LN2 overclockers in mind, so you won't want to play with that part unless you have extreme cooling to hand. Otherwise you will simply blow the card up.

Also on the back are numerous other switches for overclocking, overvolting and even memory voltage unlocking.

Again, I would not advise breaking off the skin and throwing said switches, because MSI have very cleverly left us all of the settings we need for permanent safe overclocks without allowing us to open Pandora's box and wander from the Garden of Eden. And this is nice, because for most of us this card will need to be our day to day card.

Also note that MSI have torn up the rulebook on PCIe power usage and put two 8-pin connectors onto the card.

And, as with all of their other Lightning cards, they have fitted voltage probe sockets.

So what use are all these extras then? Well, sadly even though this card offers all of these overclocking niceties you still have to play the AMD silicon lottery. Thankfully though a good portion of problematic overclocks are caused by insufficient power stages, and that is one thing this card is not short of.

Time for overclocking.

The Lightning card is factory overclocked out of the box. Instead of the traditional 880MHz at 1175mV it ships with a 940MHz stock speed at the same voltage. However, start to push the clocks and you begin to realise just how important the power stages are. It took me an eye watering 1250mV to get my card totally stable in Kombustor at 960MHz. However, as 6 series Radeons go this is quite a mighty overclock.
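To put those clocks into perspective, here is a quick Python sketch of the percentages involved. It only uses the figures quoted above; the helper name and constants are made up for the example.

```python
def percent_gain(base: float, new: float) -> float:
    """Percentage increase going from base to new."""
    return (new - base) / base * 100.0

REFERENCE_MHZ = 880   # reference 6970 core clock
FACTORY_MHZ = 940     # Lightning out of the box
MANUAL_MHZ = 960      # the Kombustor-stable overclock
STOCK_MV = 1175       # stock core voltage
MANUAL_MV = 1250      # voltage needed for 960MHz on this sample

print(f"Factory vs reference: +{percent_gain(REFERENCE_MHZ, FACTORY_MHZ):.1f}%")
print(f"Manual vs reference:  +{percent_gain(REFERENCE_MHZ, MANUAL_MHZ):.1f}%")
print(f"Manual vs factory:    +{percent_gain(FACTORY_MHZ, MANUAL_MHZ):.1f}%")
print(f"Voltage increase:     +{percent_gain(STOCK_MV, MANUAL_MV):.1f}%")
```

On this particular card the last 20MHz cost roughly three times as much in voltage, percentage wise, as it gave back in clock speed.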

And our 3DMark11 score, coming from an Intel i7 950 at stock speed.

Pushing on I managed to get the card stable at 1035MHz with 1350mV. Sadly not only did this scare the crap out of me (I'm no DICE overclocker!) it also proved too much for the AMD throttle. Everything was perfectly stable, yet 3DMark11 returned a score of only 4800 points. I would strongly imagine the core's thermal throttle was kicking in and I was very wary of removing it, given that this is not a case of gung ho, thrash til she blows!

However the 960MHz overclock with 1250mV has remained stable and steadfast, providing some excellent gaming results.

So onto the reason I bought this card

The mighty GPU slaying Battlefield 3.

As you may have noted above I used to have a GTX 470 with a Zalman VF3000F cooler attached to it. I bought this card in October of 2010 on special, and the cooler also. Sadly when running Battlefield 3 I was running into stutter problems. This was odd, because when I looked around me I was seeing perfectly acceptable benchmarks using my card. In fact, the GTX 470 was only 6 or 7 frames away from the stock 6970L. So what gives? Well, it took me a while to figure out, but Battlefield 3 looks the way it does for a reason. I'm none too happy about this reason, because it simply confirms what I thought about DirectX 11 being a poor API.

Instead of the API taking care of the drawing and complex patterns the game has simply been stuffed full of enormous great high resolution textures. This is far from ideal, because not only do these textures gorge on VRAM but they also require serious brute force to get them moving. And when you add FSAA into the mix? VRAM levels rocket. Not just rocket to high end levels, but rocket to levels that most Nvidia cards cannot provide.

I knew then that it was time to upgrade, and so I turned my attention to the GTX 570. Sadly, though, users of several forums were also logging serious doubts about the card's ability with Battlefield 3 when in heavy multiplayer scenes. After lots of research I found a thread where they had finally identified the issue, and had started to report back with VRAM usage levels.

And, with the settings on ultra, at 1080p and with 4x FSAA the game was logging a usage of an eye watering 1608MB of VRAM. So finally AMD's completely frivolous overkill of VRAM could come into its own.

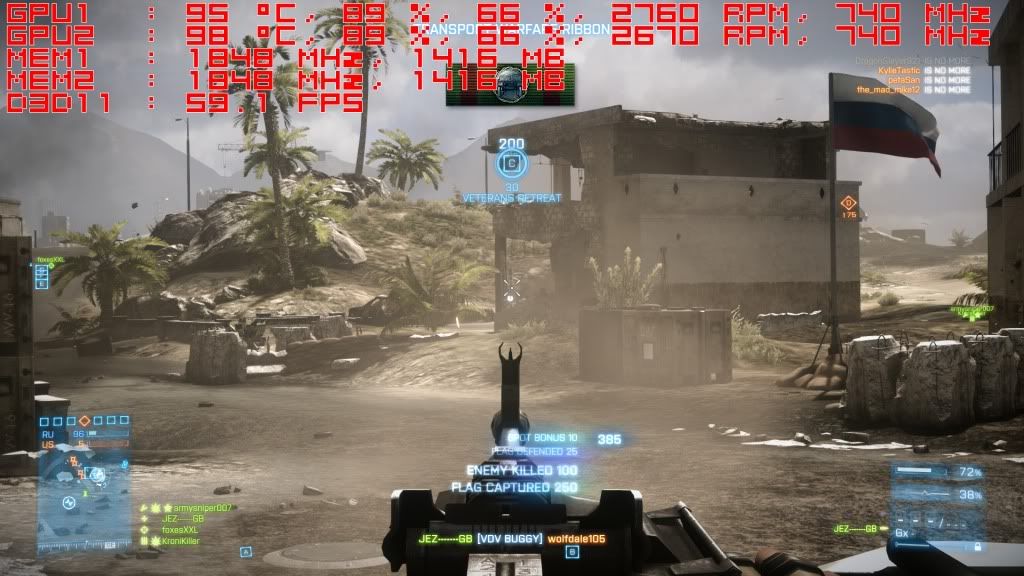

So I started up the game and ran FRAPS to measure FPS and GPU-Z to log VRAM usage. In single player in the tank stages I measured this.

And it did not get any better sadly. Levels when storming the city at night soared to 1480MB of VRAM. And that is without taking into account multiplayer with countless other soldiers on the field.
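To show why those numbers matter, here is a rough Python sketch comparing the usage figures against how much memory each card carries. The usage figures are the ones quoted above. The capacities are the standard reference card specs (1280MB for the GTX 470 and GTX 570, 2048MB for the 6970), which I am assuming apply to whatever model you are looking at, and the names in the code are made up for the example.

```python
# Reference VRAM capacities in MB (assumed: standard reference specs).
CARD_VRAM_MB = {"GTX 470": 1280, "GTX 570": 1280, "HD 6970": 2048}

# Usage figures quoted in this review, in MB.
USAGE_MB = {
    "forum report, ultra 1080p 4x FSAA": 1608,
    "single player, city at night": 1480,
}

def headroom_mb(capacity_mb: int, usage_mb: int) -> int:
    """Spare VRAM in MB; a negative number means the card is over budget."""
    return capacity_mb - usage_mb

for card, capacity in CARD_VRAM_MB.items():
    for scene, usage in USAGE_MB.items():
        spare = headroom_mb(capacity, usage)
        verdict = "fits" if spare >= 0 else "over budget"
        print(f"{card:8} | {scene:34} | {spare:+5d}MB | {verdict}")
```

Anything that comes out negative has to spill over into system memory, which would go a long way towards explaining the stutter.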

It's funny really. I never thought that a game's benchmark would ever consist of vram usage as the benchmark itself but Battlefield 3 just takes the biscuit.

I'm sure you are all sitting there saying "Yeah yeah, shut up and give us the FPS count". 45. That is the important number, and the game basically sat jammed at it. Obviously at times it shot up to 60, hitting the Vsync, but for the most part it sat at a rock solid 45 FPS with the card at stock, and 48 with the card overclocked to 960MHz.

So where are all the other benchmarks? They don't matter. The entire existence of a graphics card is now based around how it can run Battlefield 3. If it can do that, on ultra, with everything switched on and 4x FSAA?

It's man enough for any task.

Conclusion

So come on then. Let's answer the burning question.

Is this card a worthy successor to the 480 Lightning?

No. It isn't. And this is down to nothing more than the 6970 core itself being rubbish for overclocking. So what's the point, you may ask?

Firstly this card will very easily out-clock and out-cool a standard Radeon 6970. Not only that but it will remain very stable and can take a punishing amount of voltage. The cooler itself is pretty quiet, but what is nice is that the audible noise it makes is not that horrible hollow blower hoover sound. It's quite a nice whoosh, and something that is easy to live with. I'm sure that with more knowledge I could have quite easily gotten the card to 1GHz stable and with acceptable temps but in all honesty there was no point.

However, you need to remember that this card costs a mere £320. When you compare that to the wallet busting price tag attached to the 480 Lightning, it's a bargain. Even better still, Overclockers UK have a batch of cards that failed to make the 940MHz stock speed at stock voltage for £267, bundled with £60 worth of games. And when you consider that the card can easily be made stable by pushing up the voltage, it makes the package even more attractive.
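If you want to weigh up the two deals, the sums can be sketched in Python like this. The prices are the ones quoted above; the idea of discounting the games by how much you actually want them is my own assumption, as are the names in the code.

```python
FULL_PRICE_GBP = 320      # normal retail card
BATCH_PRICE_GBP = 267     # batch that missed 940MHz at stock voltage
BUNDLED_GAMES_GBP = 60    # quoted value of the bundled games

def effective_price(price: float, bundle_value: float,
                    worth_to_you: float = 1.0) -> float:
    """Price after subtracting the share of the bundle you actually value."""
    return price - bundle_value * worth_to_you

sticker_saving = FULL_PRICE_GBP - BATCH_PRICE_GBP
print(f"Sticker saving: £{sticker_saving}")
print(f"Effective price, games at face value: "
      f"£{effective_price(BATCH_PRICE_GBP, BUNDLED_GAMES_GBP):.0f}")
print(f"Effective price, games at half value: "
      f"£{effective_price(BATCH_PRICE_GBP, BUNDLED_GAMES_GBP, 0.5):.0f}")
```

Set `worth_to_you` to 0 if you would never have bought the games anyway, and the batch card is still £53 cheaper.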

What the card has in its corner, and what was the deciding factor for me, was the VRAM. You will note that the new 7970 has 3GB of VRAM. Trust me when I say this is not a gimmick. Give it another year and no doubt I will be upgrading again as 2GB won't be enough.

For

It's a Lightning card. This means you can expect a build quality bordering on the insane.

It's built like a tank, and has looks to match.

It can take a total pummelling, easily putting it in front of any stock 6970 card, but without the price tag.

It can handle anything you throw at it at 1080p.

It can take the rather poor 6970 core and get it stable at 1GHz and more.

It has plenty of VRAM.

It can be had for a very reasonable price compared to other Lightning models.

Against

It's still a 6970 core so you won't be breaking any world records for air cooling.

It's still in a "six of one" fight with the GTX 570 that costs less.

It uses a lot of power.

If you were expecting this to be the AMD equivalent of the 480 Lightning you will be disappointed.

The cooler isn't as nice as the 480 Lightning, nor as unique.

I would like to thank the other sites I used pictures from. These include Guru3D and HardOCP.
