Kei


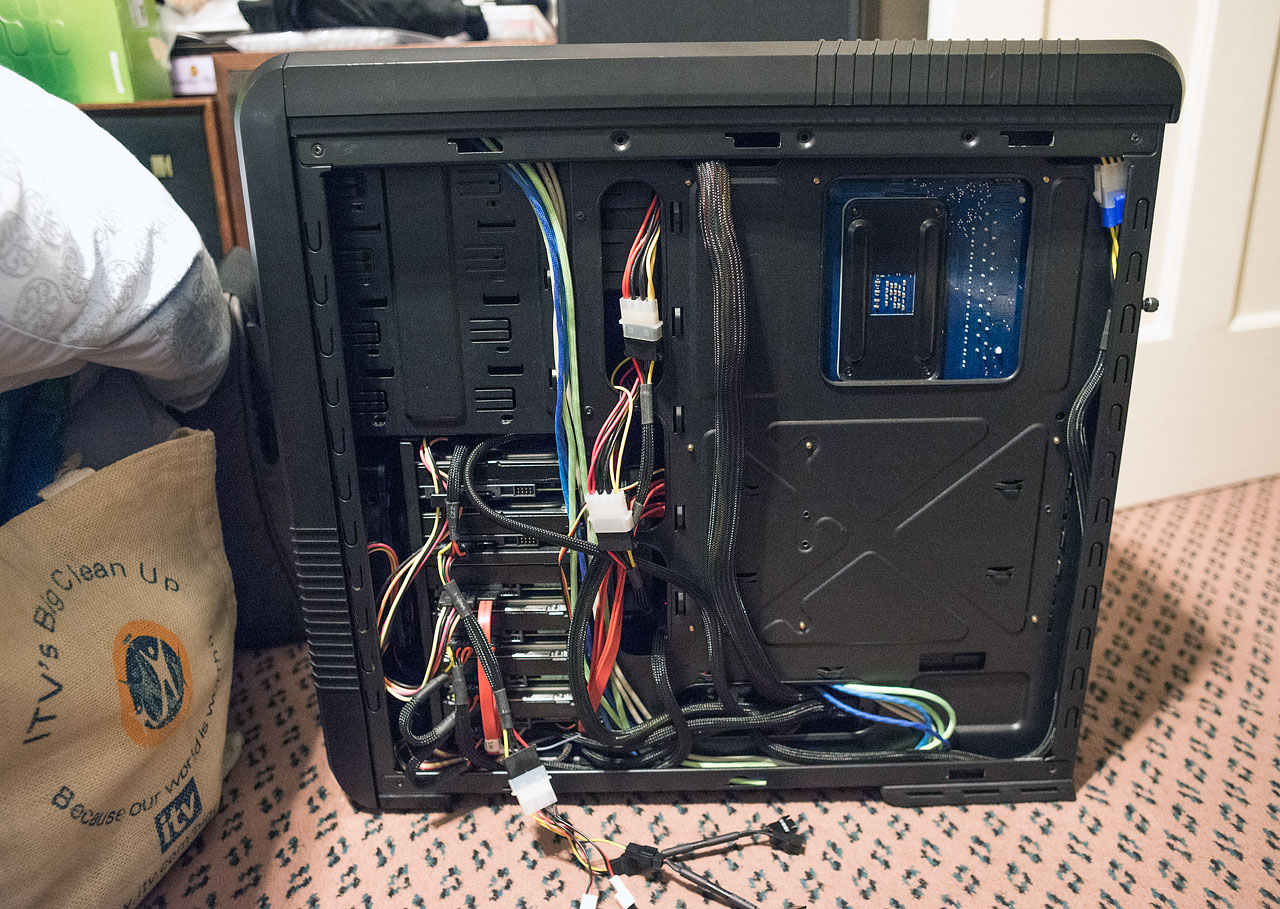

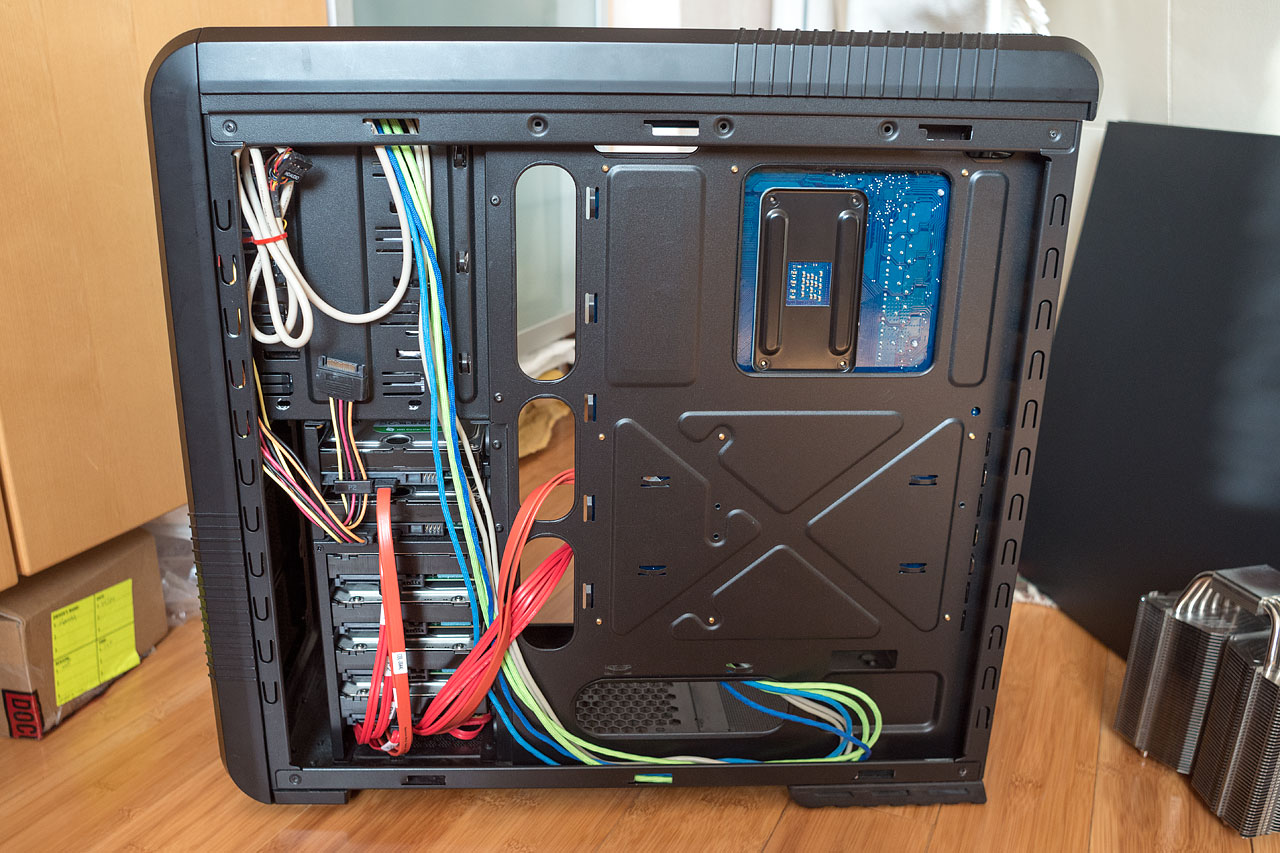

Decided I wanted to build a server/nas to help with backups etc so I've cobbled together all of my spare parts and collected a few freebies across the last few months. Here's how it looks at present:

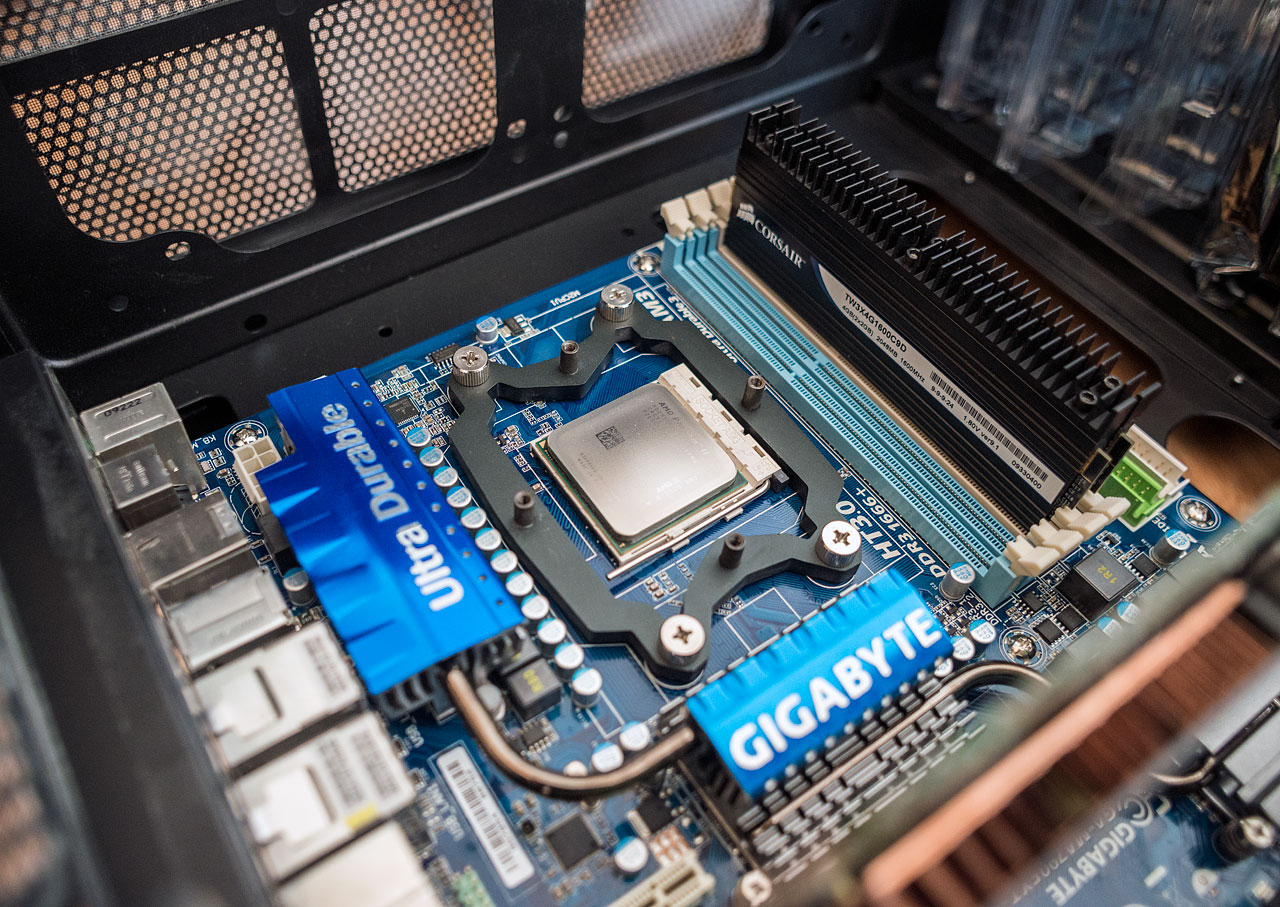

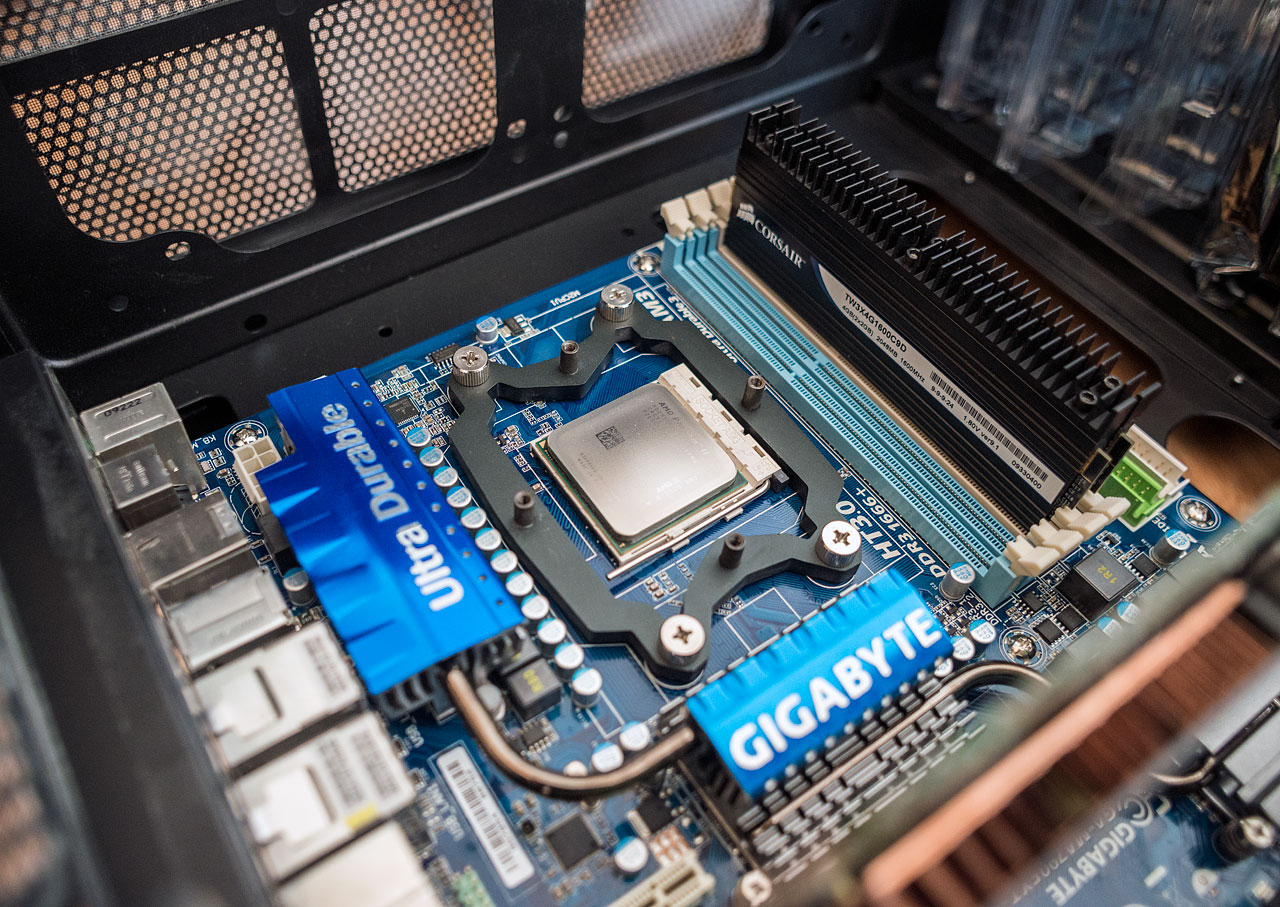

Gigabyte GA-MA790FXT-UD5P

AMD Phenom II X4 955

Corsair Dominator 4GB 1600

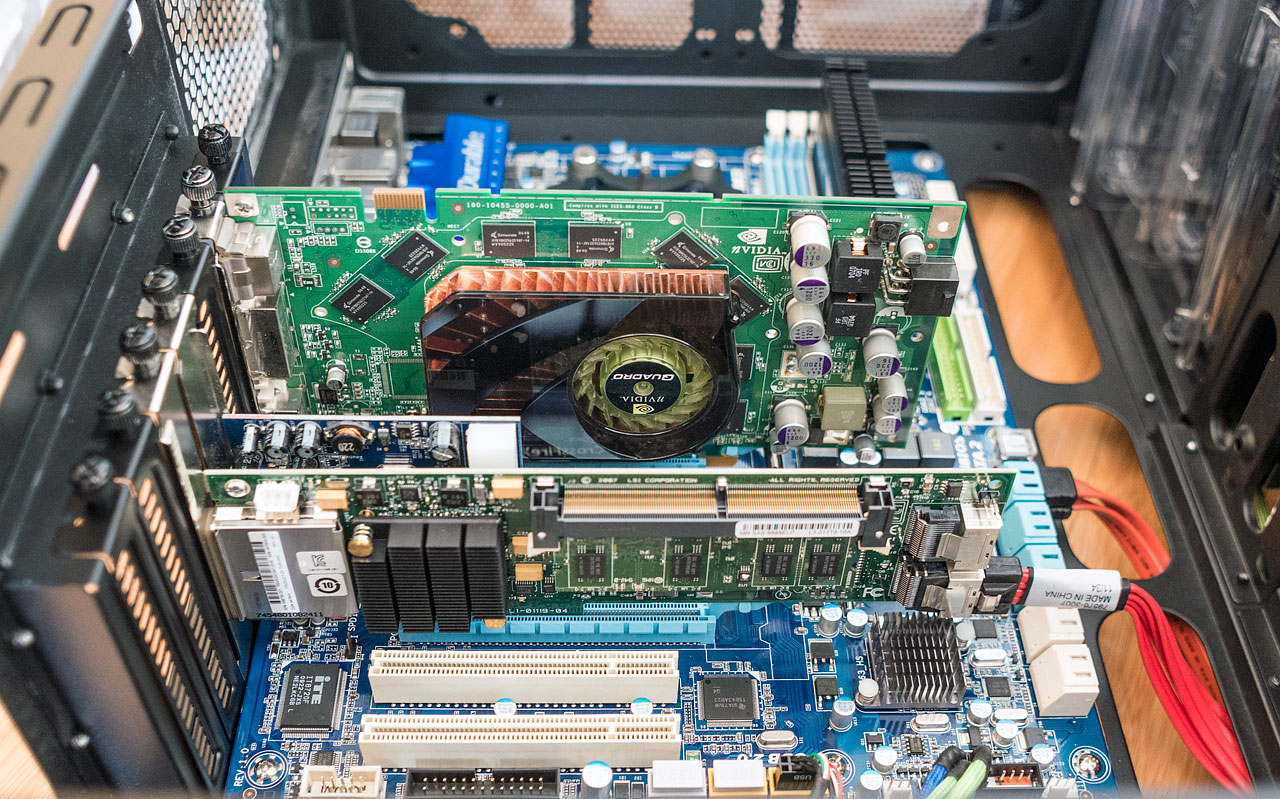

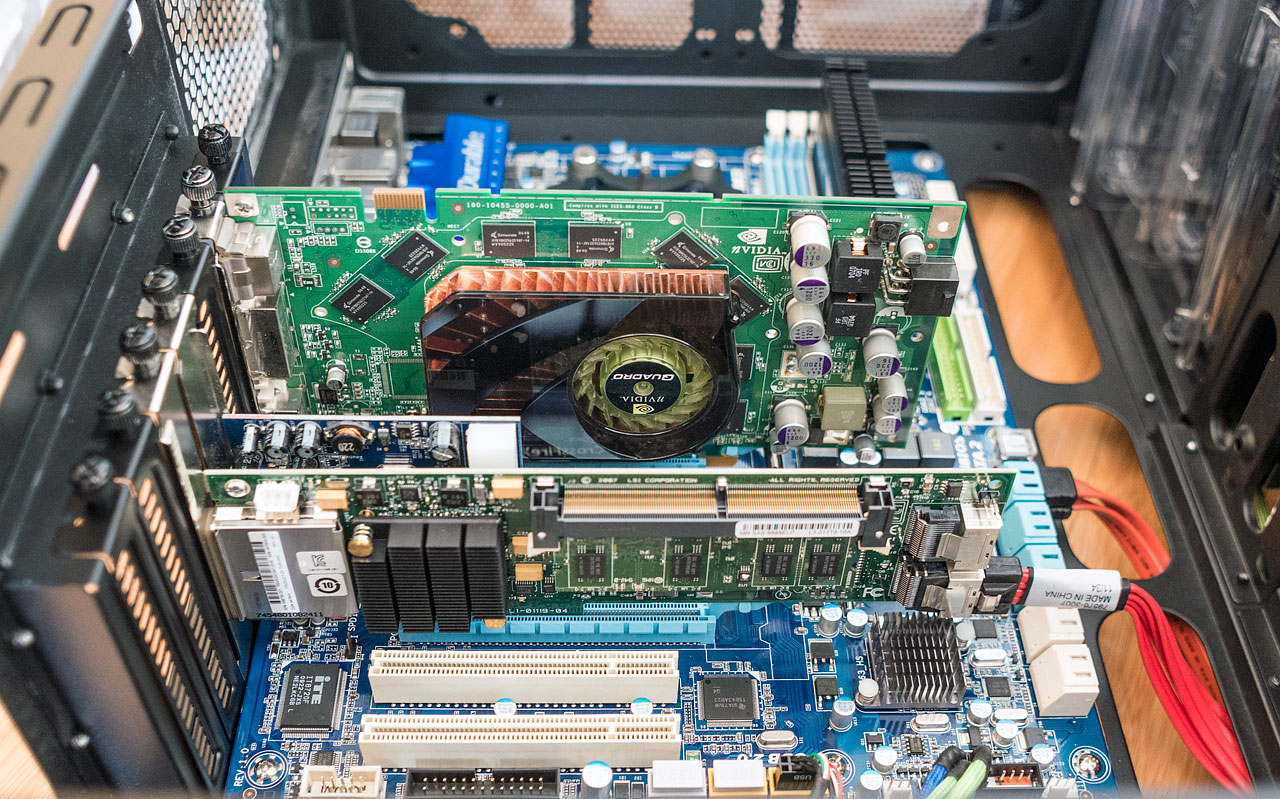

Nvidia Quadro FX3500

LSI MegaRAID SAS 8888ELP

Delta 825W PSU

Belkin USB 3.0 card

Prolimatech Megahalems

Coolermaster CM690-II

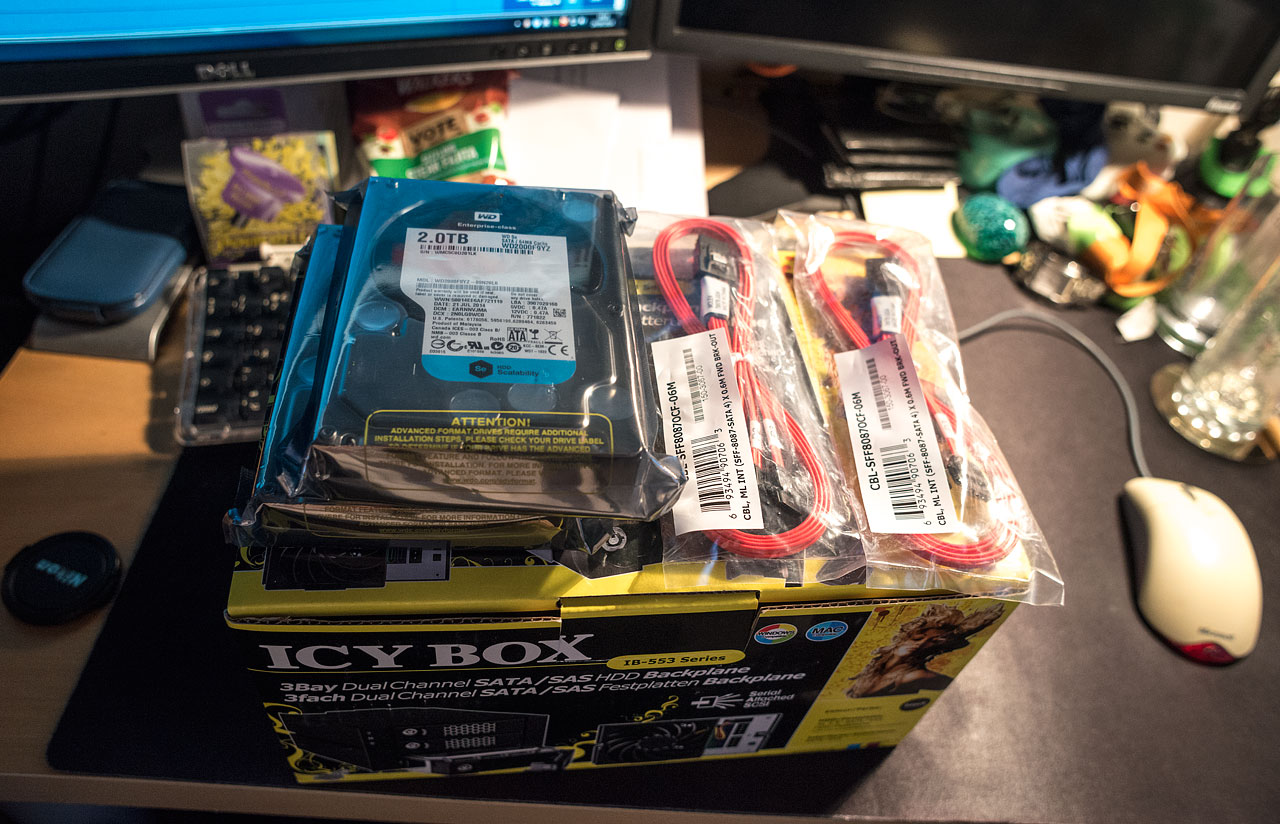

Hard drive wise, at present I have a load of different disks ranging from 500GB to 1TB (8x 500GB, 2x 640GB and a 1TB). I reckon 8x 2TB drives on the HBA in RAID 5 (maybe 6) should suffice for main storage, though I may start out with 4 due to cost and expand later. I can then use the 4x 500GB Seagate Constellation ES2 drives I already have in a RAID 10 array on the onboard SATA for the OS, giving 1TB usable with mirroring, which should fit within the non-EFI constraints for bootup. I'll need to get two ICYBOX backplanes that each fit 3x 3.5" drives into 2x 5.25" bays. I'm hoping to use Ubuntu Server 14.04 on it too, but I'm not sure on the file system type yet.
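For anyone wanting to check the sums on the arrays above, here's a rough sketch of the usable-capacity arithmetic. It assumes equal-sized disks and ignores filesystem and controller overhead; `usable_tb` and `MBR_LIMIT_TB` are names made up for this example, not anything from the RAID card's tools. The MBR figure assumes 512-byte sectors.

```python
def usable_tb(level, disks, size_tb):
    """Usable capacity in TB for an array of equal-sized disks.

    Simplified: ignores filesystem and controller metadata overhead.
    """
    if level == 5:
        if disks < 3:
            raise ValueError("RAID 5 needs at least 3 disks")
        return (disks - 1) * size_tb   # one disk's worth of parity
    if level == 6:
        if disks < 4:
            raise ValueError("RAID 6 needs at least 4 disks")
        return (disks - 2) * size_tb   # two disks' worth of parity
    if level == 10:
        if disks < 4 or disks % 2:
            raise ValueError("RAID 10 needs an even number of disks, 4 or more")
        return disks // 2 * size_tb    # every disk is mirrored once
    raise ValueError("unsupported RAID level")


# Largest disk an MBR partition table can address with 512-byte sectors.
MBR_LIMIT_TB = 2**32 * 512 / 1e12

if __name__ == "__main__":
    plans = [
        ("main, 8x 2TB RAID 5", 5, 8, 2.0),
        ("main, 8x 2TB RAID 6", 6, 8, 2.0),
        ("main, 4x 2TB RAID 5 (starting point)", 5, 4, 2.0),
        ("OS, 4x 500GB RAID 10", 10, 4, 0.5),
    ]
    for label, level, disks, size in plans:
        print(f"{label}: {usable_tb(level, disks, size):.1f} TB usable")
    print(f"MBR limit: about {MBR_LIMIT_TB:.2f} TB")
```

The point of the last line is just that the 1TB OS array sits comfortably under the MBR limit, whereas the main array would not, so it would need GPT (fine for a data volume that isn't booted from).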

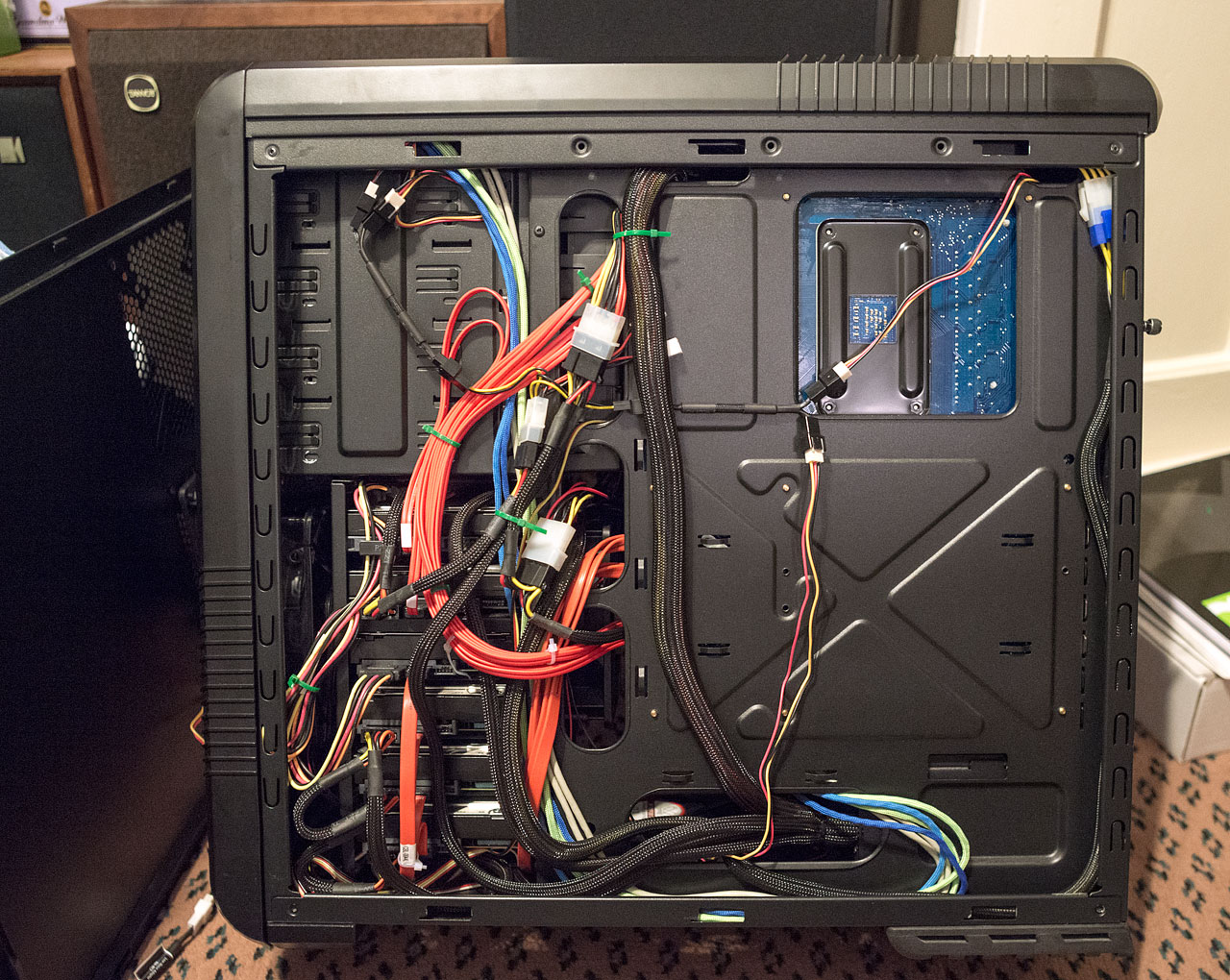

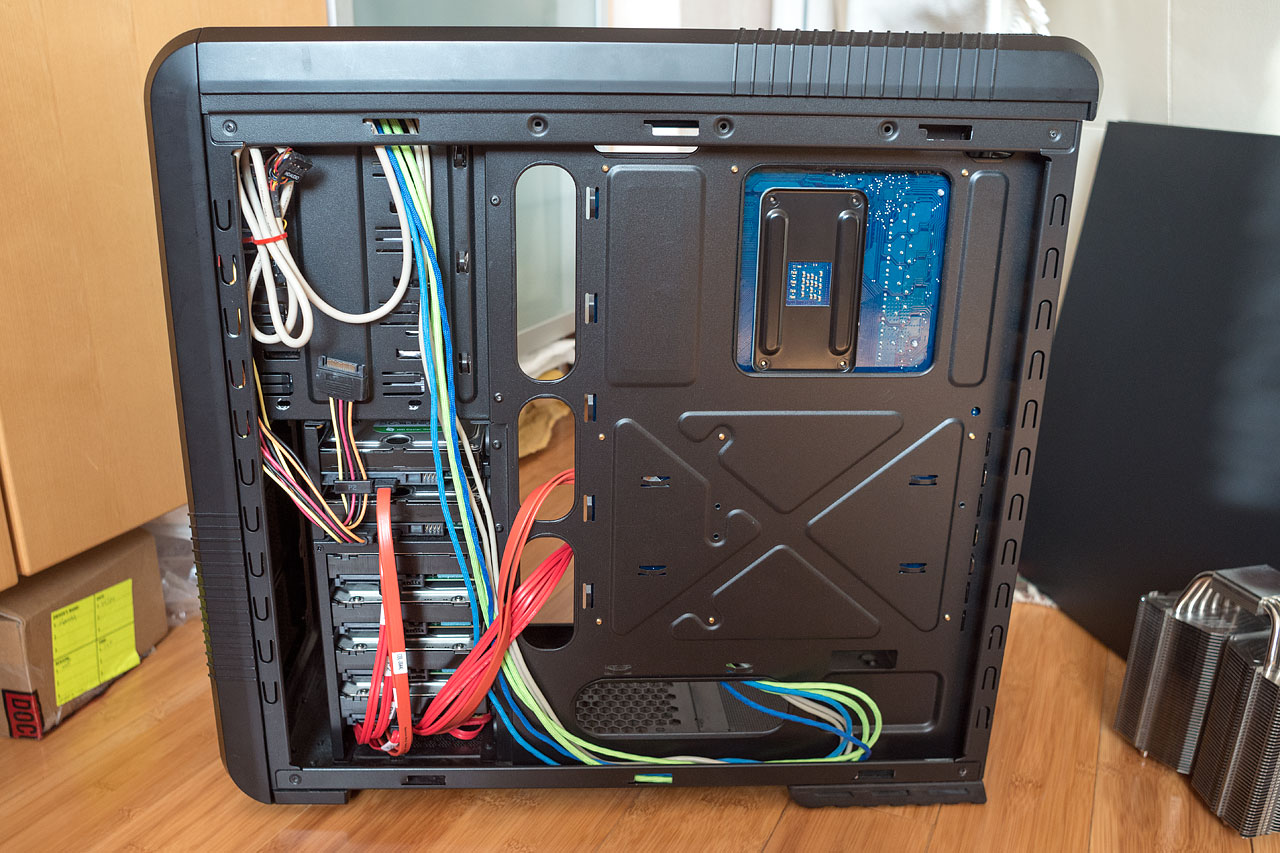

Trying to keep it reasonably neat, though I imagine cable chaos will ensue once I have all 12 disks fitted.

My old Phenom II X4 955, which I bought back in 2008 and ran at 3.7GHz right up until January this year, has a new lease of life. I will probably drop the clocks back down and see how low I can take the voltage (being an old C2 stepping, it liked lots of volts). I get the feeling it'll be overkill for the intended purpose.

An HP-branded LSI MegaRAID 8888ELP 8-port SAS HBA and an Nvidia Quadro FX3500 (again, probably too powerful for the purpose).

Tried the PSU in the Phanteks and it doesn't fit either. Even though the bolt locations are ATX, the physical size is WTX, which is significantly bigger than ATX. Nicely made supply though.

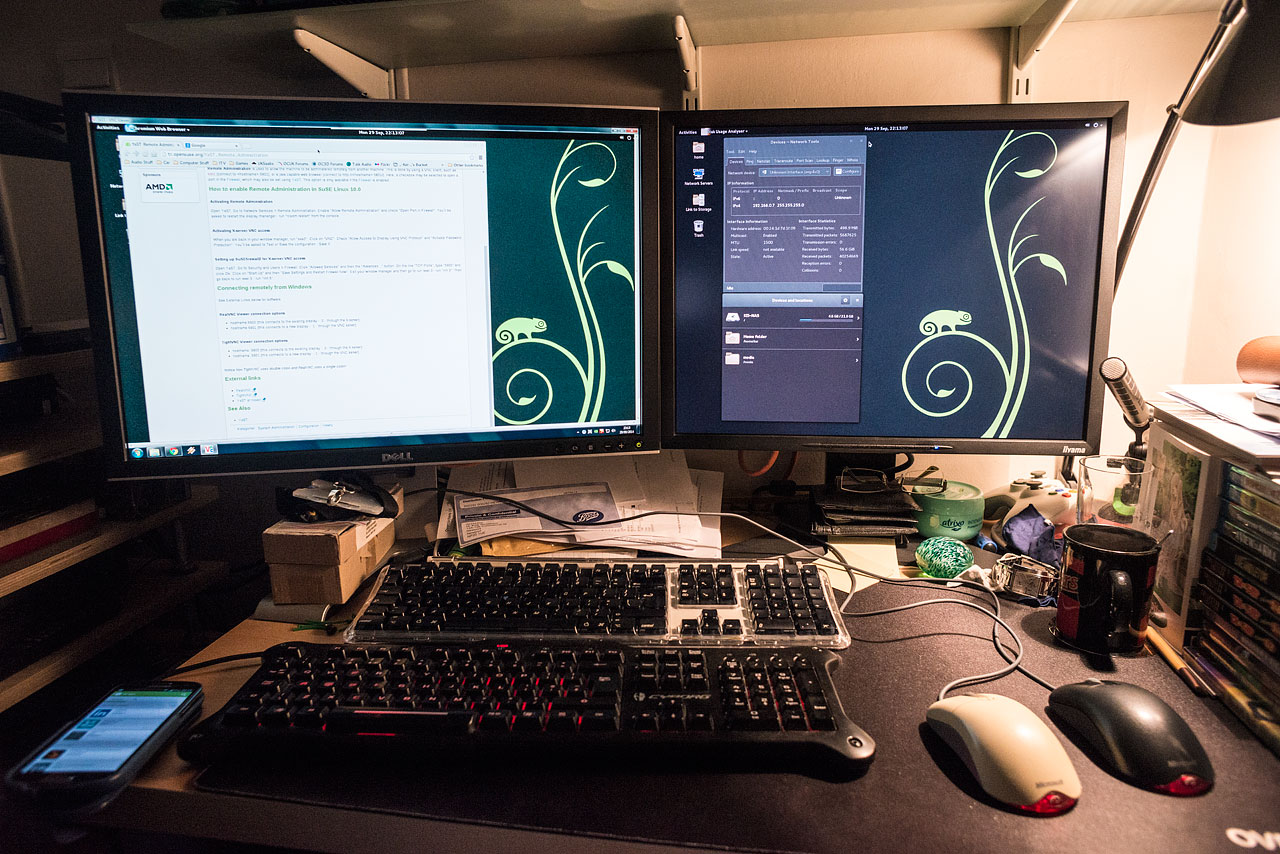

Unfortunately, upon testing I have not been able to get any life from it: it just powers on and sits there with the GPU fan spinning full tilt. I tried some basic bits like resetting the CMOS, trying one memory module at a time and running with no drives connected, but no luck. So far I've found that the GPU, RAID card and memory are all still working perfectly. That leaves the PSU, motherboard and processor. The motherboard, processor and RAM were all working happily together before, though, which leaves me with major doubts about the PSU. I'll need to pull the PSU out of the other PC to test tonight. If that is where the fault lies, I'll have to buy a new PSU. (I have a sneaking suspicion that the WTX pinout differs from ATX.)

