NeverBackDown

AMD Enthusiast

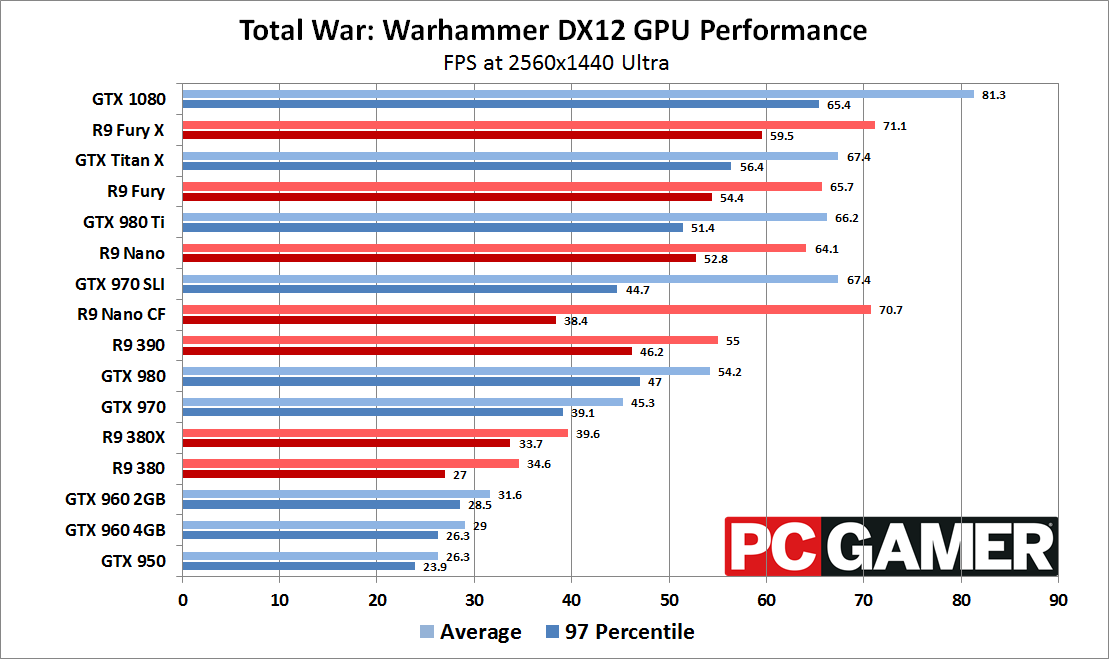

The same argument could be made about double-precision floating point: we know AMD excels at it and Nvidia struggles. Then we could also bring up Intel's ability to leap forward with much higher IPC than AMD. Each manufacturer has their strengths and weaknesses.

Yes, but people were expecting huge advances from Nvidia regarding DX12 and Async Compute. This and other reviews show that's not the case, and Nvidia more than likely won't support it at a hardware level in the future, which really hurts them tbh. DX12 isn't going away, and no amount of pre-emption or dynamic loading can beat concurrent processing. I wasn't dismissing Nvidia, just getting the info out. It's a quick news thread after all.