Hey everyone. :hello:

I've been trying to decide between an r9 290x and the 780 ti for my build. It is a fairly high-end build with a 4770k and 16 GB of 2400 MHz RAM, and I will use it for gaming, some music composition and art work, including starting out in 3D rendering, Photoshop and drawing.

My arguments for and against are:

-780 ti is more costly than 290x (for me about $900 compared to $730)

-780 ti generally seems to have the higher framerate/better benchmarks, although the gap is closing with non-reference 290x cards

-290x has more memory, which may be useful for rendering at higher resolutions

-If Mantle takes off, 290x could be close to or even better than 780 ti in Mantle-supported programs/games

-Mantle may make the 290x a longer-lasting card (used more efficiently) and may also make the 780 ti feel previous-gen and 'old' because it does not support Mantle

At the moment, I believe there is a fairly high chance of Mantle taking off, having seen articles suggesting that EA are including it in their Frostbite engine and that Mantle makes games easier to port across consoles. (Link to some of this information: http://www.dailyfinance.com/2013/11/25/amds-shift-is-beginning-to-pay-off/)

Now, for the comparison between the cards: according to one review (link removed), the 780 ti consistently beats the 290x when both are ASUS non-reference cards:

Battlefield: 290x - 83 fps, 780 ti - 95.3 fps

Crysis 3: 290x - 36.9 fps, 780 ti - 42.6 fps

Metro Last Light: 290x - 48 fps, 780 ti - 53.7 fps

Unigine Valley: 290x - 63.8 fps, 780 ti - 72.5 fps

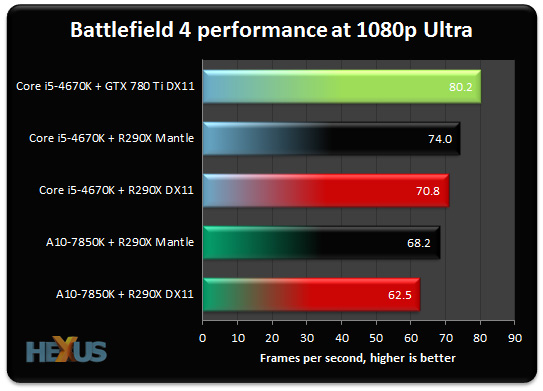

However, in comparisons where Mantle is definitely used:

Battlefield 4: 290x with Mantle - 130 fps, 780 ti - 145 fps

That seems quite odd next to other comparisons:

Singleplayer Battlefield 4: 290x with Mantle - 62.7 fps, 780 ti - 62.1 fps

Multiplayer Battlefield 4: 290x with Mantle - 83.9 fps, 780 ti - 70.2 fps

This conflicting information is fairly confusing, and I'm not sure whether Nvidia have simply not optimised their card for Battlefield 4, so that it performs worse in that game than in others. If so, that would make the comparison slightly unfair and make the 290x look better than it actually is across the board.
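To put all of these numbers on one scale, here is a rough Python sketch that turns each result into a percentage lead for the 780 ti and compares it with the price difference. The fps figures are just the ones quoted above; the function names are made up for illustration, and the prices assume the 290x is the $730 card and the 780 ti the $900 one.

```python
# fps figures copied from the post, as (290x, 780 ti).
results = {
    "Battlefield": (83.0, 95.3),
    "Crysis 3": (36.9, 42.6),
    "Metro Last Light": (48.0, 53.7),
    "Unigine Valley": (63.8, 72.5),
    "BF4 (Mantle, first source)": (130.0, 145.0),
    "BF4 singleplayer (Mantle)": (62.7, 62.1),
    "BF4 multiplayer (Mantle)": (83.9, 70.2),
}

# Assumption: the 290x is the $730 card and the 780 ti the $900 one.
PRICE_290X = 730.0
PRICE_780TI = 900.0


def lead_percent(fps_290x, fps_780ti):
    """Percentage by which the 780 ti leads the 290x (negative if it trails)."""
    return (fps_780ti - fps_290x) / fps_290x * 100.0


def price_premium_percent(cheaper, dearer):
    """Percentage by which the dearer card costs more than the cheaper one."""
    return (dearer - cheaper) / cheaper * 100.0


if __name__ == "__main__":
    for name, (fps_290x, fps_780ti) in results.items():
        lead = lead_percent(fps_290x, fps_780ti)
        print(f"{name:28s} 780 ti lead: {lead:+.1f}%")
    premium = price_premium_percent(PRICE_290X, PRICE_780TI)
    print(f"{'Price':28s} 780 ti premium: {premium:+.1f}%")
```

On these figures the 780 ti leads by roughly 12 to 15% in the first four tests while costing about 23% more, and the Mantle multiplayer result is the only one where it clearly trails. This says nothing about which set of benchmarks is the more trustworthy one.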

Anyway, with all this information, I really do not know what to think or what to buy. I would really appreciate other people's opinions on this and which GPU I should purchase.

Thanks!
