OverKILL3D ASUS Matrix Platinum GTX980 4 Way SLI Review

- Thread starter tinytomlogan

NeverBackDown

AMD Enthusiast

It's disappointing to see how so many AAA titles don't support Quad SLI or even SLI setups...

Not really. It's very hard to get even one GPU working at 100% given all the limitations of DX and AFR. So adding more won't solve that problem, it'll just make it more complicated.

Mysterae

New member

Not really. It's very hard to get even one GPU working at 100% given all the limitations of DX and AFR. So adding more won't solve that problem, it'll just make it more complicated.

I disagree, it's damn disappointing. Considering how much AMD and Nvidia rake in from folks who buy multiple graphics cards, never mind one, they should make it a priority to ensure buyers' investments are worthwhile and to demonstrate it, which would drive more sales. It's a bit complicated? Oh boo fucking hoo!

Yes, someone shat in my muesli this morning.

NeverBackDown

AMD Enthusiast

I disagree, it's damn disappointing. Considering how much AMD and Nvidia rake in from folks who buy multiple graphics cards, never mind one, they should make it a priority to ensure buyers' investments are worthwhile and to demonstrate it, which would drive more sales. It's a bit complicated? Oh boo fucking hoo!

Yes, someone shat in my muesli this morning.

Boo fucking hoo, huh? Well, it's not AMD's or Nvidia's fault, so continue to blame them if you will. It's an API limitation, along with the AFR technique being used. Adding more cards on top of something that is already complicated (x4 for 4-way SLI/Xfire) only makes it harder, and the fact that they do work and provide some benefit is amazing. So disagree all you want, but it's not their fault and drivers can't fix it. Have you not been following the news lately? I said this in my previous post... guess you only read what you want?

The very strong push towards modern APIs, you know, like DX12/Vulkan and the now-EOL Mantle (the basis for Vulkan), should help alleviate these issues. But it won't be for a few years, simply because new engines will need to be built entirely around DX12 rather than having it patched in. But that's a different matter.

Merxe

New member

Not really. It's very hard to get even one GPU working at 100% given all the limitations of DX and AFR. So adding more won't solve that problem, it'll just make it more complicated.

So in the end, if a game runs like crap (e.g. AC Unity) it's always the fault of the devs that they screwed up the optimization?

I always thought that if a game runs well on SLI it will run even better with 3 or 4 cards. From the tests here it seems like some games follow that rule and some don't (funny that many Ubisoft titles don't...). Is it the outdated engines that make it hard for the devs to support more cards?

All companies use different engines, so for example games made using Unreal Engine will probably benefit each time the engine's core code is updated and the games built on it release a patch. Arma 3 is an example where it took the devs a while before they used SLI properly.

I did read something recently about work on a way to make the system share its resources, making it easier to dev for... but I may have dreamt that :-D

NeverBackDown

AMD Enthusiast

So in the end, if a game runs like crap (e.g. AC Unity) it's always the fault of the devs that they screwed up the optimization?

I always thought that if a game runs well on SLI it will run even better with 3 or 4 cards. From the tests here it seems like some games follow that rule and some don't (funny that many Ubisoft titles don't...). Is it the outdated engines that make it hard for the devs to support more cards?

Not at all, devs can only do so much. In some cases, yes, it is just a lack of optimization, though I doubt it's single-handedly their fault. I'm more apt to blame publishers, as they are the ones calling the shots. I'm just saying that with current DX (and previous DX APIs) and the use of AFR, it's rather complicated to get an engine to work well with SLI/Xfire. Newer engines are better, but support would need to be built in and not patched in. Some games are outliers (for example CoH2, which refuses to use more than one card), while others do benefit. The thing is, only some do and not all. It's just complicated. Don't get me wrong, it's doable, but like I said it's time consuming, and when you're on a time and money budget it all counts. With DX12 on the way it should get easier on some level. SPS would know more about that, but based on what we know about the direction DX12 is going, I think it's safe to assume it would be easier to implement, as long as the engine is built with DX12 in mind, since according to many sources that is the only way to get the full benefit of it.
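To put rough numbers on the AFR point, here's a toy Python sketch. It is not how any real driver works: `afr_schedule` just hands frames to GPUs round-robin, and `estimated_speedup` borrows an Amdahl-style formula. The `serial_fraction` parameter is a made-up knob standing in for the share of each frame's work that has to wait on the previous frame, and both function names are hypothetical.

```python
def afr_schedule(num_frames, num_gpus):
    """Alternate frame rendering: frame i is handed to GPU i % num_gpus."""
    return [frame % num_gpus for frame in range(num_frames)]


def estimated_speedup(num_gpus, serial_fraction):
    """Amdahl-style estimate of multi-GPU scaling (an assumption, not a measurement).

    serial_fraction is the hypothetical share of per-frame work that cannot
    overlap with other frames, e.g. because it depends on the previous frame.
    """
    return 1.0 / (serial_fraction + (1.0 - serial_fraction) / num_gpus)


if __name__ == "__main__":
    # Which GPU renders each of the first 8 frames in a 4-way setup.
    print(afr_schedule(8, 4))
    # How the estimate changes as cards are added, for an assumed 25% serial share.
    for gpus in (1, 2, 3, 4):
        print(gpus, round(estimated_speedup(gpus, 0.25), 2))
```

Under that assumption, each extra card adds less than the one before it, which is roughly the pattern of some games scaling to 3 or 4 cards and others not.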

AlienALX

Well-known member

It's very, very rare that a game gets SLI or CFX support at a low level in the code. Devs are lazy and cheap, it costs money to code support.

It's usually left down to Nvidia and AMD to get the scaling right. Different engines and different code need to be scaled differently, and YMMV.

There are only two to three ways scaling can work, so if that doesn't work you've had it (see also every UBI game ever).

AMD are putting the ability to change it yourself back into the driver. There used to be a tool that allowed you to change profiles, now the drivers will be going back to the older style and allowing you to change it yourself in the hope it can get the profile working quicker. However, AMD state that sometimes they can add other optimisations that this driver tool won't let you change, offering up even more performance.

As an example: if a game dev made a game for SLI only, it would not work properly on one card. As such, they would need to sell different versions of the game. It's just not economical.

All is not lost, however. New APIs like DX12, and new GPU architectures like Nvidia's Pascal, allow GPUs to talk to one another at a low level, so according to them you will be able to run as many as you like, different makes and even models, and they'll all scale.

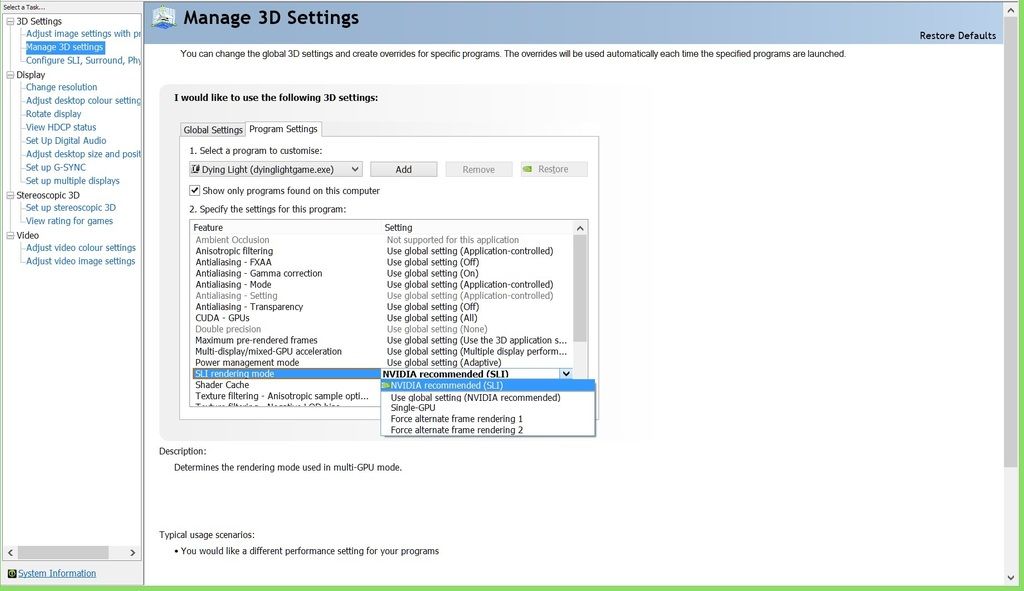

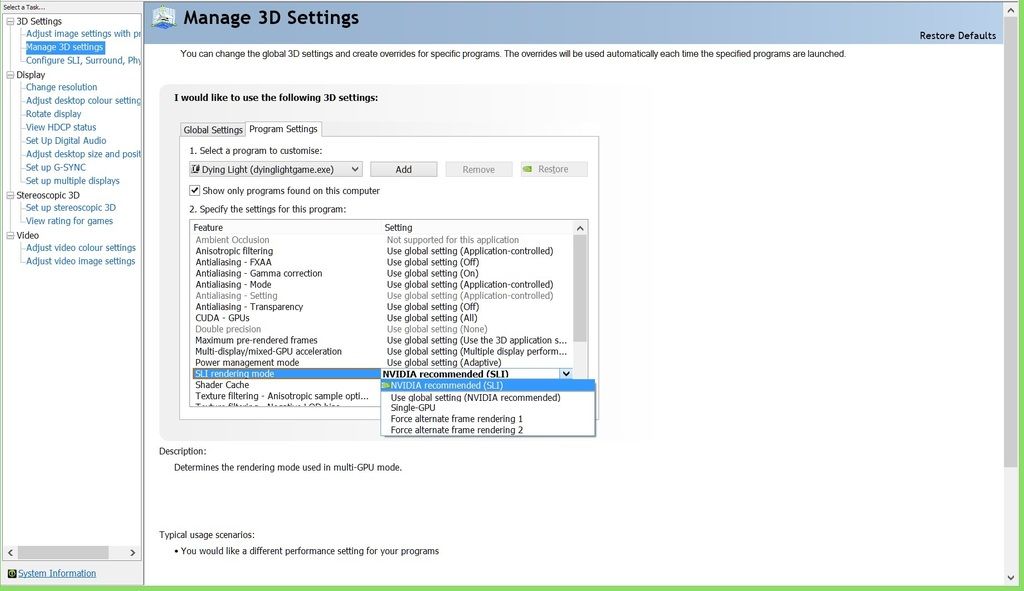

Nvidia offer you the profile changing option in their drivers now.
