Look, folks, there is clearly a reason why most high-end cards use MLCCs for at least their central capacitors.

If der8auer got an extra 30-40 MHz, good for him, but when these GPUs can go over 2000 MHz, that is a difference of roughly 2% or less. You will not notice that difference in games.
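A quick sanity check on that percentage, as a rough sketch: the 2000 MHz base is just the round figure from above (an assumption, since real boost clocks vary by card and load), and 2100 MHz is a hypothetical clock to show what happens above it.

```python
def percent_gain(extra_mhz: float, base_mhz: float) -> float:
    """Return the extra clock as a percentage of the base clock."""
    return 100.0 * extra_mhz / base_mhz

# Assumed base clocks: 2000 MHz (round figure) and a hypothetical 2100 MHz.
for base in (2000, 2100):
    for extra in (30, 40):
        print(f"+{extra} MHz on {base} MHz = {percent_gain(extra, base):.1f}%")
```

At exactly 2000 MHz the top of the range works out to 2%, and any clock above 2000 MHz pulls it below that.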

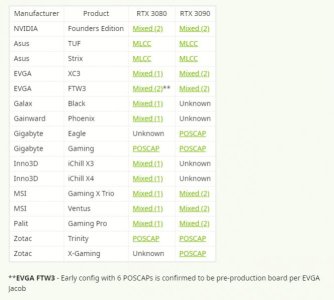

Remember that Gigabyte's Gaming OC and Eagle OC models are not its premium releases, and non-premium models are designed to run at their listed specs, not to be overclocked.

The main point here is that configurations like Gigabyte's Gaming OC and Eagle OC work.

I'm going to watch the der8auer video now, but I am curious whether his testing used Nvidia's latest drivers. If not, would that 30-40 MHz gap still exist with those drivers?