Interesting that yesterday there were warnings that the 9070s had problems with memory temps (Wccftech). There was also something on Threads about excess power usage and lack of efficiency, and Tom's Hardware was complaining that FSR isn't up to DLSS quality. So that's two sites and an influencer spreading FUD the day before launch. Cards marked.

I watched Tom first, then Tech-Jesus, and half paid attention to Jay2c, and there's serious gaslighting going on when I look at the spread of results across the vast majority of games. When they start calling out percentages in the single or low double digits, look closely at the graph. A card that scores 33fps is 10% faster than a card that scores 30fps; in reality, that means f all and could have cost you 750 of your favourite currency.
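To put the 33fps vs 30fps example in numbers: the relative gain looks headline-worthy, while the absolute gap is tiny. A minimal sketch, using only the two fps figures above; the frame-time conversion and the function names `relative_gain` and `frame_time_ms` are my own illustrative additions, not anything from the reviews.

```python
def relative_gain(fast_fps: float, slow_fps: float) -> float:
    """Percentage by which fast_fps exceeds slow_fps."""
    return (fast_fps - slow_fps) / slow_fps * 100.0


def frame_time_ms(fps: float) -> float:
    """Average milliseconds per frame at a given average fps."""
    return 1000.0 / fps


fast, slow = 33.0, 30.0  # the two scores from the example above

# The number that ends up in the review headline
print(f"{relative_gain(fast, slow):.1f}% faster")
# The same result in absolute terms
print(f"{fast - slow:.0f} fps more")
print(f"{frame_time_ms(slow) - frame_time_ms(fast):.2f} ms shaved off each frame")
```

Run as-is, it reports a 10.0% lead that amounts to 3 fps, or about 3 ms per frame.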

First of all, you have to remove the x090s, as anyone looking at a 9070-series card can't or won't pay 1500-3000 for a card. The 5080s sit in the 1200-1500 bracket, again not an option, even if you can find one.

That leaves the 5070 (Ti)s and the previous-gen 7900 XT(X)s, 4080s and 4070s, all in the same ballpark. Ray tracing and AI inferencing are where the development bucks are being spent; there's f all improvement in raster gen on gen, and if you measured raster only across a selection of 50 AA(A) games with outliers removed, I suspect a 10% band would cover all of them. Soooo...

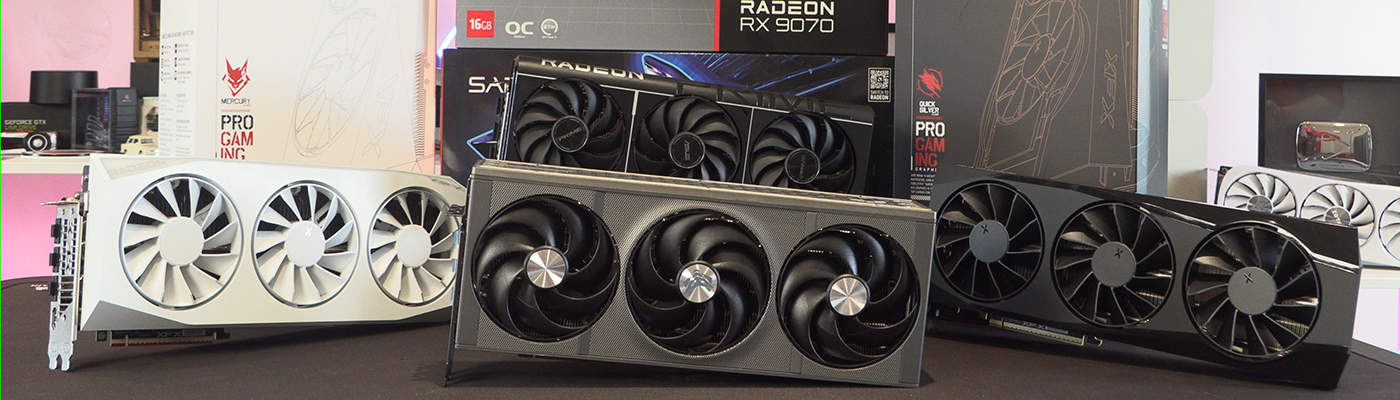

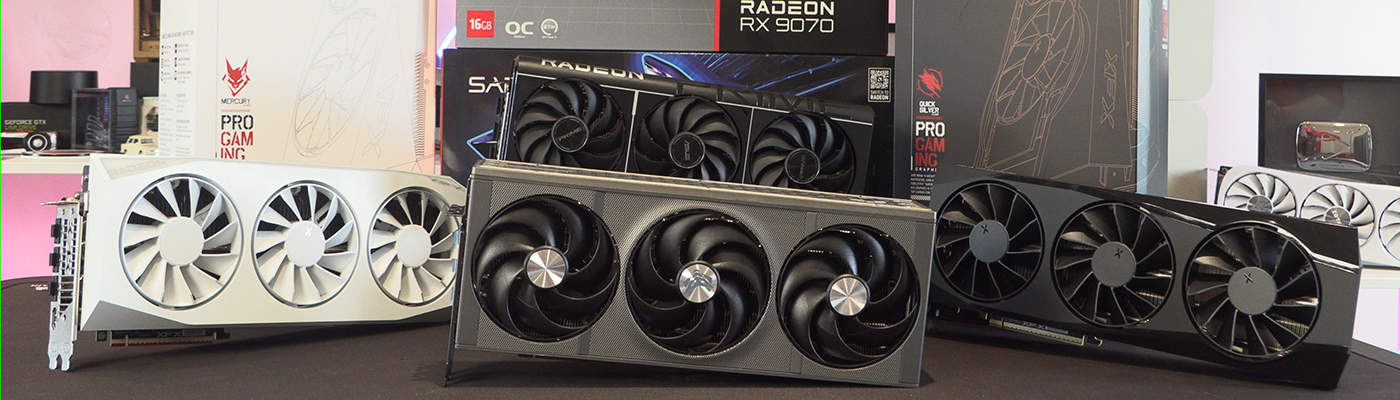

If you're an RT fan with 1000-1200 burning a hole in your pocket, get a 5070 Ti. If you're into AI inferencing, get a 16GB Nvidia card, or for 50% of the performance at 50% of the cost, get a 9070 XT. Raster fan? Bang for buck seems to be the 9070, which, as priced yesterday, has killed the Nvidia x070 market.

Being an optimist, I'm seeing 25% less cost for better performance gen on gen on the AMD side, with a good uptick in AI/RT performance. It's too early to tell whether it will shift the market, but it can only help.