WYP

News Guru

It's finally here! Here's the GPU's pricing and specs.

Read more about Nvidia's RTX 2060 graphics card.

"Better performance than the 1080 Ti," he said, lol!! I must have transferred universes in my sleep... Such marketing. I'm always iffy about his keynotes.

Yeah, somebody needs to follow him with a speech bubble that says (in specific use cases).

I remember him comparing it to a GTX 1070 Ti, but I don't remember the GTX 1080 Ti comparison.

About the only thing I hate about this card? No NVLink, which could have given it a fighting chance in RT-based games. I suspect the 1160 will be better value.

It does leave me confused as to why... unless performance vs. value would make 2060 SLI more worthwhile than a single 2070, or maybe even a 2080?

I would have thought Nvidia would like to entice people to double up on their cards.

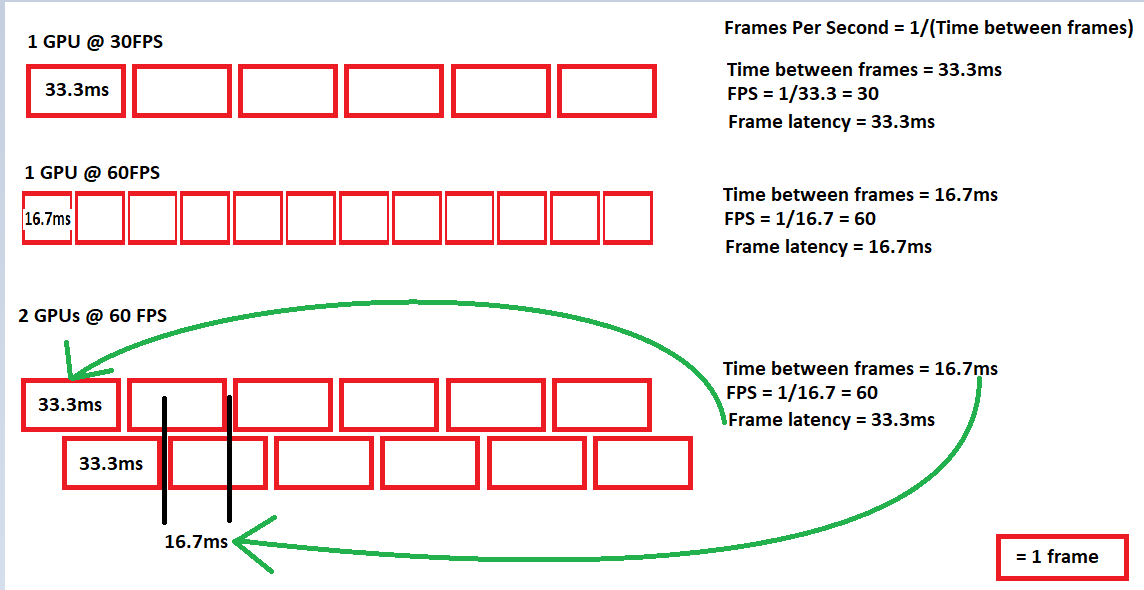

Currently a single 2060 is plenty for 1080p/1440p; for 4K, 6GB of VRAM isn't exactly ideal. 2060 SLI serves no real purpose in gaming outside high-refresh-rate 1440p, but then you'd ideally want a single powerful GPU for better frame pacing.

In the future the extra computational oomph might come in handy at lower resolutions, but at that point the next gen cards are the better option.

I might be overly critical since my 760 SLI setup was... less than stellar.

Huh, never thought about it that way, but it's pretty obvious when you point it out. The time between frames halves if you double the frame rate, but the time taken to render each frame (the contributing factor to input lag) only halves if each frame is actually produced in half the time. SLI doesn't do that: the two cards render frames alternately, so each card produces frames at half the rate they're displayed.
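To put rough numbers on that, here's a quick Python sketch. It assumes perfectly idealized alternate frame rendering (no sync overhead, perfect scaling), and the function name `frame_timing` is just made up for illustration, so treat it as a back-of-the-envelope model rather than how any real driver behaves.

```python
def frame_timing(displayed_fps, num_gpus=1):
    """Return (frame_interval_ms, render_time_ms) for an idealized AFR setup.

    Assumption: with N GPUs rendering alternate frames, each GPU delivers
    every Nth displayed frame, so it spends N frame intervals on each one.
    """
    frame_interval_ms = 1000.0 / displayed_fps
    render_time_ms = frame_interval_ms * num_gpus
    return frame_interval_ms, render_time_ms


single_60 = frame_timing(60, num_gpus=1)    # one card at 60 FPS
sli_120 = frame_timing(120, num_gpus=2)     # two cards, 120 FPS displayed
single_120 = frame_timing(120, num_gpus=1)  # one faster card at 120 FPS

for label, (interval, render) in [
    ("single GPU @ 60 FPS", single_60),
    ("2-way AFR @ 120 FPS", sli_120),
    ("single GPU @ 120 FPS", single_120),
]:
    print(f"{label}: {interval:.1f} ms between frames, {render:.1f} ms to render each")
```

In this model the 2-way setup at 120 FPS shows a new frame every 8.3 ms, but each frame still took about 16.7 ms to render, same as the single card at 60 FPS. Only the single faster card actually cuts the render time.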

Well, well... Seems Gamers Nexus benchmarked this card in Battlefield V at 1080p with RTX off/low/high, and the average results were 104, 66 and 55 FPS respectively.
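For anyone who prefers frame times to FPS, a small Python sketch converting those three averages. The only inputs are the figures quoted above; the dictionary name and labels are mine, and averages say nothing about frame-time consistency.

```python
# Average FPS figures quoted above (Battlefield V, 1080p).
avg_fps = {"RTX off": 104, "RTX low": 66, "RTX high": 55}

baseline = avg_fps["RTX off"]
results = {}
for setting, fps in avg_fps.items():
    frame_time_ms = 1000.0 / fps                        # average time per frame
    drop_pct = (baseline - fps) / baseline * 100.0      # FPS lost vs. RTX off
    results[setting] = (frame_time_ms, drop_pct)
    print(f"{setting}: {frame_time_ms:.1f} ms per frame, {drop_pct:.1f}% below RTX off")
```

That works out to roughly 9.6 ms, 15.2 ms and 18.2 ms per frame, so turning RTX on costs somewhere between a third and a half of the frame rate in this test.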

For a 2060 card, that is a pretty good performer in my eyes,